What is a data center switch?

A data center switch is a high-performance networking device that carries traffic between servers, storage systems, and other network components within a data center. These switches typically support high-speed connections (10G, 40G, 100G, and faster) to handle large volumes of data efficiently, and they are crucial for keeping communication inside the data center fast and reliable.

How do data center switches differ from traditional network switches?

Unlike traditional network switches, data center switches are designed to handle higher data traffic volumes and ensure low latency in a large-scale infrastructure. They typically offer greater scalability, redundancy, and performance to support cloud services, virtualization, and big data applications commonly found in data centers.

What are the key features of a data center switch?

Key features include high throughput, low latency, scalability, fault tolerance, and support for advanced features like Software-Defined Networking (SDN). These switches often come with advanced security features, automation capabilities, and integration with network management systems for better control over data flow.

What is the role of Layer 2 and Layer 3 switching in a data center?

Layer 2 switching is responsible for forwarding frames based on MAC addresses, typically used for internal communication within a VLAN. Layer 3 switching enables routing based on IP addresses and is essential for inter-VLAN communication and routing traffic between different subnets in a data center environment.
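To make the Layer 2 behavior concrete, here is a minimal Python sketch of how a learning switch might decide where to send a frame. It assumes a single VLAN and no aging of table entries, and the class and method names are hypothetical. The switch records which port each source MAC address was seen on, sends frames for known destinations out of one port, and floods broadcasts and unknown destinations to every other port.

```python
class LearningSwitch:
    """Minimal Layer 2 learning switch (single VLAN, no MAC aging)."""

    BROADCAST = "ff:ff:ff:ff:ff:ff"

    def __init__(self, ports):
        self.ports = set(ports)
        self.mac_table = {}  # MAC address -> port it was last seen on

    def receive(self, in_port, src_mac, dst_mac):
        """Return the set of ports a frame arriving on in_port is sent out of."""
        # Learn: the source MAC is reachable through the ingress port.
        self.mac_table[src_mac] = in_port
        out_port = self.mac_table.get(dst_mac)
        if dst_mac != self.BROADCAST and out_port is not None:
            # Known unicast: forward on one port (drop if it would go back out in_port).
            return set() if out_port == in_port else {out_port}
        # Broadcast or unknown unicast: flood to every port except the ingress port.
        return self.ports - {in_port}


sw = LearningSwitch(ports=[1, 2, 3, 4])
print(sw.receive(1, "00:00:00:00:00:0a", "00:00:00:00:00:0b"))  # unknown: flooded
print(sw.receive(2, "00:00:00:00:00:0b", "00:00:00:00:00:0a"))  # learned: port 1
```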

What are the benefits of 100GbE data center switches?

100GbE (100 Gigabit Ethernet) switches provide very high bandwidth, reducing congestion and speeding up data transfers. This is especially beneficial for modern data centers that handle massive data flows from cloud applications, AI workloads, and big data analytics, because faster links put each packet onto the wire sooner and relieve queuing, which lowers latency.
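One concrete way higher link speeds reduce latency is serialization delay, the time needed to put a frame's bits onto the wire. The sketch below computes it for a 1,500-byte frame (an assumed size; Ethernet preamble and inter-frame gap are ignored) at several link speeds. It covers only this one component of latency; propagation and queuing delay are not included.

```python
def serialization_delay_us(frame_bytes: int, link_gbps: float) -> float:
    """Time to transmit frame_bytes onto a link of link_gbps, in microseconds."""
    bits = frame_bytes * 8
    return bits / (link_gbps * 1e9) * 1e6  # seconds -> microseconds


for speed in (10, 40, 100, 400):
    print(f"{speed:>3}G: {serialization_delay_us(1500, speed):.3f} us")
```

For a 1,500-byte frame this works out to 1.2 µs at 10G, 0.3 µs at 40G, 0.12 µs at 100G, and 0.03 µs at 400G.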

What is the difference between a managed and unmanaged switch?

A managed switch provides advanced features like traffic monitoring, VLAN support, and remote configuration, making it ideal for data centers where network performance and security are critical. An unmanaged switch, on the other hand, offers basic connectivity without management capabilities and is typically used in smaller, less complex networks.

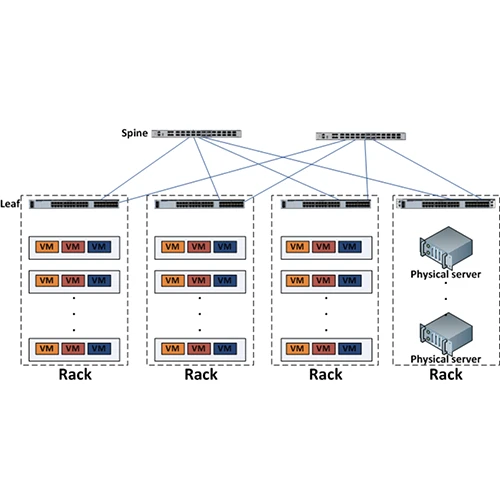

How does a spine-leaf topology work with data center switches?

Spine-leaf topology is a two-tier network design used in modern data centers to improve scalability and keep latency low and predictable. Leaf switches connect directly to end devices such as servers and storage, and every leaf switch connects to every spine switch, which together form the backbone of the network. As a result, traffic between servers on different leaves always crosses the same number of hops (leaf, spine, leaf), and the multiple equal-cost paths through the spines spread the load and reduce bottlenecks.
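The sketch below models a small spine-leaf fabric in Python to show why every pair of leaves has several equal-length paths. The switch names and the four-spine, eight-leaf size are arbitrary assumptions. Each leaf is linked to every spine, so traffic between two leaves always crosses exactly one spine, and the number of available paths equals the number of spines.

```python
from itertools import product


def build_fabric(num_spines: int, num_leaves: int):
    """Return spine names, leaf names, and the set of (leaf, spine) links."""
    spines = [f"spine{i}" for i in range(1, num_spines + 1)]
    leaves = [f"leaf{i}" for i in range(1, num_leaves + 1)]
    # Every leaf connects to every spine; there are no leaf-leaf or spine-spine links.
    links = set(product(leaves, spines))
    return spines, leaves, links


def paths_between(src_leaf: str, dst_leaf: str, spines, links):
    """All leaf -> spine -> leaf paths between two different leaves."""
    return [
        (src_leaf, spine, dst_leaf)
        for spine in spines
        if (src_leaf, spine) in links and (dst_leaf, spine) in links
    ]


spines, leaves, links = build_fabric(num_spines=4, num_leaves=8)
for path in paths_between("leaf1", "leaf7", spines, links):
    print(" -> ".join(path))
```

Removing a leaf-to-spine link from `links` (for example, `links.discard(("leaf1", "spine2"))`) leaves three paths, which illustrates how the fabric keeps forwarding with reduced capacity after a link failure.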

What is the role of SDN in data center switching?

Software-Defined Networking (SDN) enables centralized control of the data center network, allowing for dynamic adjustments to traffic patterns. SDN in data center switches improves network management, increases automation, and enhances the ability to scale the network on-demand, all while reducing operational complexity.

How do data center switches support cloud computing?

Data center switches play a vital role in cloud computing by connecting the infrastructure needed to support cloud services. They ensure fast and reliable data transfer between servers, storage, and end-users, and they support virtualization technologies that allow for resource pooling and multi-tenancy, which are essential for cloud environments.

What is a fabric switch in a data center?

A fabric switch is a high-performance switch that connects multiple devices in a data center, forming a network fabric. It provides non-blocking, low-latency data transfer and is typically used in high-density environments where many devices need to be interconnected. These switches are key to ensuring a high-throughput, low-latency network backbone.

What are the main advantages of 400G data center switches?

400G data center switches offer even higher bandwidth than 100G, enabling faster data transmission for applications like machine learning, AI, and real-time analytics. They are particularly useful for large-scale data centers and cloud providers looking to keep up with growing data traffic demands while ensuring minimal latency.

What are VLANs and how are they used in data center switches?

VLANs (Virtual Local Area Networks) segment a physical network into isolated logical networks within a data center. This improves security, reduces broadcast traffic, and simplifies network management. Data center switches implement VLANs by tagging Ethernet frames with a VLAN ID (IEEE 802.1Q) and keeping each VLAN's traffic separate; traffic that must pass between VLANs is routed at Layer 3.
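The IEEE 802.1Q tag that carries the VLAN ID is a 4-byte field inserted into the Ethernet header: a 16-bit TPID (0x8100) followed by a 16-bit TCI holding a 3-bit priority (PCP), a 1-bit drop-eligible indicator (DEI), and a 12-bit VLAN ID. The Python sketch below packs and parses that tag; the function names are illustrative and not taken from any particular library.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q-tagged frame


def build_vlan_tag(vid: int, pcp: int = 0, dei: int = 0) -> bytes:
    """Return the 4-byte 802.1Q tag: TPID + TCI (PCP:3 | DEI:1 | VID:12)."""
    if not 1 <= vid <= 4094:
        raise ValueError("usable VLAN IDs are 1-4094")  # 0 and 4095 are reserved
    tci = (pcp & 0x7) << 13 | (dei & 0x1) << 12 | vid
    return struct.pack("!HH", TPID_8021Q, tci)


def parse_vlan_tag(tag: bytes) -> dict:
    """Split a 4-byte 802.1Q tag back into its PCP, DEI, and VLAN ID fields."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError("not an 802.1Q tag")
    return {"pcp": tci >> 13, "dei": (tci >> 12) & 0x1, "vid": tci & 0x0FFF}


print(build_vlan_tag(100, pcp=5).hex())  # 8100a064
```

Parsing those bytes returns VLAN 100 with priority 5. The PCP field is the same 802.1Q priority that switches can use for Layer 2 QoS.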

How does Quality of Service (QoS) work in data center switches?

Quality of Service (QoS) prioritizes network traffic to ensure that critical applications receive the necessary bandwidth. Data center switches use QoS to manage latency, packet loss, and jitter, especially for time-sensitive applications like voice over IP (VoIP) and real-time video conferencing, by classifying and prioritizing traffic flows.
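Classification is usually the first QoS step. The sketch below reads the DSCP value from the upper six bits of an IP packet's ToS (IPv4) or Traffic Class (IPv6) byte and maps it to an egress queue. The DSCP code points (CS6 = 48, EF = 46, AF41 = 34) are standard, but the queue numbers and the mapping itself are hypothetical; real switches apply whatever QoS policy the operator configures.

```python
# Standard DSCP code points mapped to hypothetical egress queues
# (higher queue number = higher priority in this sketch).
DSCP_TO_QUEUE = {
    48: 6,  # CS6: network control, e.g. routing protocol traffic
    46: 5,  # EF: expedited forwarding, e.g. voice
    34: 4,  # AF41: interactive video
    0: 0,   # default: best effort
}


def dscp_from_tos(tos_byte: int) -> int:
    """DSCP is the upper 6 bits of the IPv4 ToS / IPv6 Traffic Class byte."""
    return (tos_byte >> 2) & 0x3F


def classify(tos_byte: int) -> int:
    """Return the egress queue; unmapped code points fall back to best effort."""
    return DSCP_TO_QUEUE.get(dscp_from_tos(tos_byte), 0)


print(classify(0xB8))  # ToS 0xB8 carries DSCP 46 (EF) -> queue 5
```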

What is the purpose of PoE (Power over Ethernet) in data center switches?

PoE allows network cables to deliver both data and power to devices like IP cameras, wireless access points, and VoIP phones. In a data center, PoE-enabled switches streamline installation and reduce the need for separate power supplies, making the infrastructure more cost-effective and efficient.

How do data center switches handle high availability and redundancy?

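Data center switches typically support high-availability features such as redundant power supplies, fans, and network links. In addition, protocols provide alternative paths when a component fails: Spanning Tree Protocol (STP) and its faster variant, Rapid Spanning Tree Protocol (RSTP), block redundant Layer 2 links to prevent loops and re-enable them when an active link fails, while Virtual Router Redundancy Protocol (VRRP) lets a backup router take over a shared gateway IP address if the primary fails. Failover is automatic, although traffic can be briefly interrupted while these protocols reconverge.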
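VRRP decides which router owns the virtual gateway address through an election. The Python sketch below shows a simplified version of that rule: among the routers that are still up, the highest priority wins, and a tie goes to the router with the higher primary IP address. The router names and addresses are hypothetical, and the sketch ignores advertisement timers, preemption settings, and other protocol details.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class VrrpRouter:
    name: str
    priority: int      # backups use 1-254; 255 is reserved for the address owner
    primary_ip: str
    alive: bool = True


def elect_master(routers):
    """Highest priority wins; a tie goes to the higher primary IP address."""
    candidates = [r for r in routers if r.alive]
    if not candidates:
        return None
    return max(candidates,
               key=lambda r: (r.priority, ipaddress.ip_address(r.primary_ip)))


group = [VrrpRouter("sw-a", 120, "10.0.0.2"), VrrpRouter("sw-b", 100, "10.0.0.3")]
print(elect_master(group).name)   # sw-a (higher priority)
group[0].alive = False
print(elect_master(group).name)   # sw-b takes over
```

When `sw-a` fails, the election makes `sw-b` the new master, which then answers for the shared gateway address so hosts keep the same default gateway.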

What are the common protocols used in data center switching?

Common protocols and standards include IEEE 802.1Q VLAN tagging, STP, TRILL, MPLS, and LACP. Together they provide network segmentation (VLANs), loop-free redundancy (STP), multipath Layer 2 forwarding (TRILL), traffic management (MPLS), and link aggregation (LACP), keeping the data center network fast, reliable, and scalable.

How do data center switches support network security?

Data center switches offer features like access control lists (ACLs), port security, and MAC address filtering to protect against unauthorized access. Additionally, modern switches can integrate with security solutions like firewalls and intrusion detection systems (IDS) to further secure the network from internal and external threats.
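An ACL is typically an ordered list of rules checked from top to bottom: the first matching rule decides, and on many switch platforms an implicit "deny" applies if nothing matches. The Python sketch below illustrates that evaluation order with the standard `ipaddress` module. The rule format is hypothetical and matches only source prefix, destination prefix, and destination port; real ACLs also match protocol and other fields and use vendor-specific syntax.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass
class AclRule:
    action: str                     # "permit" or "deny"
    src: str                        # source prefix, e.g. "10.1.0.0/16"
    dst: str                        # destination prefix
    dst_port: Optional[int] = None  # None matches any destination port


def evaluate(acl, src_ip, dst_ip, dst_port):
    """Check rules in order; the first match wins, otherwise implicit deny."""
    src = ipaddress.ip_address(src_ip)
    dst = ipaddress.ip_address(dst_ip)
    for rule in acl:
        if (src in ipaddress.ip_network(rule.src)
                and dst in ipaddress.ip_network(rule.dst)
                and rule.dst_port in (None, dst_port)):
            return rule.action
    return "deny"


acl = [
    AclRule("permit", "10.1.0.0/16", "10.2.0.10/32", 443),  # hypothetical: HTTPS to one server
    AclRule("deny", "0.0.0.0/0", "10.2.0.0/16"),            # block everything else into 10.2/16
]
print(evaluate(acl, "10.1.5.5", "10.2.0.10", 443))  # permit
print(evaluate(acl, "10.1.5.5", "10.2.0.10", 22))   # deny
```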

What is a data center switch's role in load balancing?

Data center switches facilitate load balancing by distributing traffic across multiple servers or paths, ensuring no single device becomes a bottleneck. This is essential for optimizing network performance and reliability, particularly for high-demand services like cloud applications, web hosting, and online services.

What is the significance of latency in data center switches?

Latency refers to the delay in transmitting data between devices. Low latency is crucial in data centers, as delays can affect real-time applications, such as online gaming, video streaming, and financial transactions. Data center switches are designed to minimize latency to ensure fast, efficient data transfers.

How do data center switches enable virtualization?

Data center switches support virtualization by enabling features like virtual LANs (VLANs) and virtual switching. This allows multiple virtual machines (VMs) to share the same physical network infrastructure while maintaining isolation, improving resource utilization, and simplifying network management.

How does MPLS benefit data center switching?

Multiprotocol Label Switching (MPLS) simplifies forwarding by using short, fixed-length labels instead of performing a longest-prefix lookup on the destination IP address at every hop. MPLS can optimize traffic flow in a data center network by steering traffic along chosen paths, enabling services such as traffic engineering and VPNs.
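Each MPLS label travels in a 32-bit label stack entry with a 20-bit label, 3 traffic-class bits, a bottom-of-stack bit, and an 8-bit TTL. The Python sketch below encodes and decodes that entry and performs a label swap using a small forwarding table. The table contents, label values, and interface names are hypothetical, chosen only to show that forwarding becomes an exact-match lookup on the incoming label.

```python
import struct


def encode_label_entry(label: int, tc: int, bottom: bool, ttl: int) -> bytes:
    """Pack one MPLS label stack entry: Label(20) | TC(3) | S(1) | TTL(8)."""
    if not 0 <= label < 2**20:
        raise ValueError("label must fit in 20 bits")
    word = label << 12 | (tc & 0x7) << 9 | int(bottom) << 8 | (ttl & 0xFF)
    return struct.pack("!I", word)


def decode_label_entry(data: bytes) -> dict:
    (word,) = struct.unpack("!I", data)
    return {"label": word >> 12, "tc": (word >> 9) & 0x7,
            "bottom": bool((word >> 8) & 0x1), "ttl": word & 0xFF}


# Hypothetical label forwarding table: incoming label -> (outgoing label, interface)
LFIB = {16001: (24005, "eth1"), 16002: (24010, "eth2")}


def swap(entry: bytes):
    """Exact-match lookup on the incoming label, then swap it and decrement TTL."""
    fields = decode_label_entry(entry)
    if fields["ttl"] <= 1:
        return None  # TTL would expire: drop the packet
    out_label, out_if = LFIB[fields["label"]]
    new_entry = encode_label_entry(out_label, fields["tc"], fields["bottom"],
                                   fields["ttl"] - 1)
    return new_entry, out_if


new_entry, out_if = swap(encode_label_entry(16001, tc=0, bottom=True, ttl=64))
print(out_if, decode_label_entry(new_entry))
```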

What is port density in data center switches?

Port density refers to the number of network ports available on a switch. High port density is essential for data centers, as it allows for more devices to be connected to a single switch. This reduces the number of switches required and can help optimize space and power consumption in the data center.

How do data center switches scale?

Data center switches scale by supporting modular expansion or by allowing multiple switches to interconnect and form larger networks. Technologies like leaf-spine architectures and distributed switching enable data centers to grow while maintaining high performance, low latency, and minimal downtime.
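When scaling a two-tier leaf-spine fabric, two numbers come up often: the leaf's oversubscription ratio (server-facing bandwidth divided by spine-facing bandwidth) and the maximum fabric size, which is bounded by the spine port count. The sketch below computes both, assuming one uplink from each leaf to each spine and that every spine port faces a leaf; the 48 x 10G downlinks, 6 x 40G uplinks, and 32-port spines are hypothetical values.

```python
from dataclasses import dataclass


@dataclass
class LeafSwitch:
    downlinks: int         # server-facing ports
    downlink_gbps: float
    uplinks: int           # spine-facing ports, one to each spine
    uplink_gbps: float


def oversubscription(leaf: LeafSwitch) -> float:
    """Server-facing bandwidth divided by spine-facing bandwidth (1.0 = non-blocking)."""
    return (leaf.downlinks * leaf.downlink_gbps) / (leaf.uplinks * leaf.uplink_gbps)


def fabric_limits(leaf: LeafSwitch, spine_ports: int) -> dict:
    """Two-tier limits: one spine per leaf uplink, one leaf per spine port."""
    return {
        "spines": leaf.uplinks,
        "max_leaves": spine_ports,
        "max_server_ports": spine_ports * leaf.downlinks,
    }


leaf = LeafSwitch(downlinks=48, downlink_gbps=10, uplinks=6, uplink_gbps=40)
print(oversubscription(leaf))                  # 480 / 240 -> 2.0
print(fabric_limits(leaf, spine_ports=32))     # 6 spines, 32 leaves, 1536 server ports
```

With these numbers each leaf is 2:1 oversubscribed, and the fabric tops out at 32 leaves. Adding spines (and matching leaf uplinks) lowers the ratio, while spines with more ports raise the leaf limit.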

What are the main factors to consider when selecting a data center switch?

Key factors include port speed, port density, scalability, reliability, latency, power consumption, and support for advanced features like SDN and automation. It’s also important to consider the specific needs of the data center, such as cloud connectivity, security features, and integration with existing infrastructure.

What are fabric interconnects in data center switches?

Fabric interconnects are high-speed connections between switches that form the backbone of a data center network. They enable fast data transfer between devices and ensure redundancy, fault tolerance, and scalability. Fabric interconnects are critical in large-scale data centers that require continuous, non-disruptive data flow.

What is link aggregation in data center switches?

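Link aggregation combines multiple physical network links into one logical link to increase the total bandwidth between two devices. Data center switches use it to create higher-capacity links and to provide redundancy in case one link fails. Traffic is usually distributed per flow, so a single flow is limited to the speed of one member link, but many flows together can use the full aggregate bandwidth, which helps avoid bottlenecks and improves network performance.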

What is the role of ARP in data center switching?

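The Address Resolution Protocol (ARP) maps IPv4 addresses to MAC addresses. Hosts and Layer 3 switches use it to find the MAC address of a device on the local subnet, and a Layer 3 switch keeps these mappings in its ARP table so it can deliver routed packets to the correct destination device efficiently. Pure Layer 2 switches forward frames using MAC addresses learned from traffic and do not rely on ARP themselves, and IPv6 uses Neighbor Discovery instead of ARP. In a large data center, ARP helps maintain smooth communication across many servers and other network devices.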
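For reference, an ARP request is a small fixed-format message. The Python sketch below builds the 28-byte ARP payload for Ethernet and IPv4 with the standard `struct` and `socket` modules; the MAC and IP addresses in the usage line are made up. In practice the payload is sent in an Ethernet frame with EtherType 0x0806 to the broadcast address, and the owner of the target IP replies with its MAC address, which the requester then caches.

```python
import socket
import struct


def mac_to_bytes(mac: str) -> bytes:
    return bytes.fromhex(mac.replace(":", ""))


def build_arp_request(sender_mac: str, sender_ip: str, target_ip: str) -> bytes:
    """28-byte ARP request for Ethernet/IPv4: 'who has target_ip? tell sender_ip'."""
    return struct.pack(
        "!HHBBH6s4s6s4s",
        1,                            # hardware type: Ethernet
        0x0800,                       # protocol type: IPv4
        6,                            # hardware address length (bytes)
        4,                            # protocol address length (bytes)
        1,                            # operation: 1 = request, 2 = reply
        mac_to_bytes(sender_mac),     # sender hardware address
        socket.inet_aton(sender_ip),  # sender protocol address
        bytes(6),                     # target MAC: unknown, this is what is being asked
        socket.inet_aton(target_ip),  # target protocol address
    )


request = build_arp_request("02:00:00:00:00:01", "10.0.0.1", "10.0.0.20")
print(len(request), request.hex())
```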

How do data center switches support multi-tenancy?

Multi-tenancy is a feature that allows multiple customers or services to share the same physical infrastructure while maintaining isolation. Data center switches support multi-tenancy by enabling features like VLANs and virtual routing, ensuring that different tenants can securely operate on the same network without interference.

What are the challenges in managing data center switches?

Challenges include handling large-scale networks with high traffic volume, ensuring network security, managing software updates and configurations, and maintaining high availability. Advanced management tools and automation solutions can help streamline these processes, reducing complexity and improving overall network reliability.

What is the role of telemetry in data center switches?

Telemetry allows real-time monitoring and collection of performance data from network devices. In data center switches, telemetry provides insights into traffic patterns, latency, packet loss, and other critical metrics, helping administrators quickly identify issues and optimize performance.
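A common telemetry calculation turns two samples of a cumulative interface byte counter (for example, the 64-bit `ifHCInOctets` counter from the SNMP IF-MIB) into a link utilization figure. The sketch below does that in Python, allowing for one counter wrap between samples; the function name and the sample values are hypothetical.

```python
COUNTER_MODULUS = 2**64  # 64-bit octet counters wrap back to zero


def link_utilization_pct(octets_t0: int, octets_t1: int,
                         interval_s: float, link_gbps: float) -> float:
    """Percent utilization from two samples of a cumulative octet counter."""
    delta = (octets_t1 - octets_t0) % COUNTER_MODULUS  # tolerates one wrap
    bits_per_second = delta * 8 / interval_s
    return 100.0 * bits_per_second / (link_gbps * 1e9)


# 75 GB received in 10 s on a 100G link
print(link_utilization_pct(1_000_000_000, 76_000_000_000, 10, 100))  # -> 60.0
```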

What are programmable switches in data centers?

Programmable switches allow administrators to customize their behavior using software to meet specific network requirements. This flexibility is particularly useful in data centers where dynamic adjustments to traffic flow, security policies, and network protocols are often required.

How do data center switches integrate with automation tools?

Data center switches can be integrated with automation tools such as Ansible, Chef, and Puppet to streamline configuration, monitoring, and maintenance tasks. This integration reduces human error, increases operational efficiency, and ensures consistent network configurations across the data center.
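Automation tools generally keep the intended configuration in one place and render per-device configuration from it; Ansible, for example, renders Jinja2 templates. The sketch below shows the same idea using only Python's standard `string.Template`. The VLAN list, switch names, and CLI-style syntax are hypothetical; a real deployment would use the target platform's syntax and push the result with the chosen automation tool.

```python
from string import Template

# Hypothetical single source of truth for the VLANs every leaf should carry.
VLANS = {10: "web", 20: "app", 30: "db"}
SWITCHES = ["leaf1", "leaf2", "leaf3"]

# Generic CLI-style syntax for illustration only; real platforms differ.
VLAN_BLOCK = Template("vlan $vid\n  name $name\n")


def render_config(hostname: str) -> str:
    """Render one switch's configuration from the shared VLAN data."""
    parts = [f"hostname {hostname}\n"]
    for vid, name in sorted(VLANS.items()):
        parts.append(VLAN_BLOCK.substitute(vid=vid, name=name))
    return "".join(parts)


configs = {switch: render_config(switch) for switch in SWITCHES}
print(configs["leaf1"])
```

Because every configuration is generated from the same data, the VLAN sections are identical on all switches, which is the consistency benefit described above.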

What is data center switch resiliency?

Resiliency refers to the ability of data center switches to maintain network availability and performance in the face of failures or disruptions. Features like redundant power supplies, hot-swappable components, and failover mechanisms contribute to resiliency, ensuring minimal downtime during hardware failures or network outages.

How do data center switches handle traffic prioritization?

Data center switches prioritize traffic using techniques like QoS, traffic shaping, and scheduling. These methods ensure that critical applications, such as VoIP or real-time video, receive the necessary bandwidth and low latency, while less time-sensitive traffic is delayed or queued.
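Traffic shaping is often built on a token bucket: tokens accumulate at the configured rate up to a burst limit, and a packet may be sent only when enough tokens are available; otherwise it waits in the queue. The Python sketch below implements that rule with explicit timestamps so it is deterministic. The class name and parameters are illustrative; switch hardware implements shaping per queue in the ASIC.

```python
class TokenBucket:
    """Token-bucket shaper: `rate` bytes/s sustained, bursts up to `burst` bytes."""

    def __init__(self, rate_bytes_per_s: float, burst_bytes: float):
        self.rate = rate_bytes_per_s
        self.burst = burst_bytes
        self.tokens = burst_bytes   # start with a full bucket
        self.last = 0.0             # time of the previous update, in seconds

    def allow(self, packet_bytes: int, now: float) -> bool:
        """True if the packet may be sent at `now`; False means it stays queued."""
        # Refill in proportion to elapsed time, capped at the bucket size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= packet_bytes:
            self.tokens -= packet_bytes
            return True
        return False


bucket = TokenBucket(rate_bytes_per_s=1000, burst_bytes=1500)
print(bucket.allow(1500, now=0.0))   # True: bucket starts full
print(bucket.allow(1500, now=0.5))   # False: only 500 tokens so far, packet waits
print(bucket.allow(1500, now=1.5))   # True: bucket has refilled
```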

What is a data center switch's role in network segmentation?

Network segmentation involves dividing a network into smaller, isolated sections to improve security and performance. Data center switches enable segmentation through features like VLANs, which ensure that traffic is properly isolated between different departments, applications, or tenants within the data center.

How does port mirroring work in data center switches?

Port mirroring allows network traffic from one port to be copied to another for monitoring or troubleshooting purposes. In a data center, this feature is used to capture and analyze traffic flows without interrupting the normal operation of the network.

How do data center switches optimize traffic flow?

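Data center switches use Equal-Cost Multi-Path (ECMP) routing to spread traffic across multiple paths of the same cost. The switch hashes header fields of each packet, typically the source and destination IP addresses, protocol, and ports, to choose a path, so all packets of one flow follow the same path and arrive in order while different flows are distributed roughly evenly across the available paths. This keeps any single link from being overwhelmed, helping maintain low latency and high performance in busy data center environments.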
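Per-flow path selection of this kind can be sketched as hashing a flow's 5-tuple and taking the result modulo the number of next hops. The Python example below uses `zlib.crc32` as a stand-in for the hardware hash functions that switch ASICs actually use, and the addresses, ports, and spine names are hypothetical. Because the hash depends only on the flow's header fields, every packet of a flow takes the same path, while many different flows spread across all paths.

```python
import zlib


def ecmp_next_hop(src_ip, dst_ip, proto, src_port, dst_port, next_hops):
    """Hash the flow's 5-tuple and map it onto one of the equal-cost next hops."""
    key = f"{src_ip}|{dst_ip}|{proto}|{src_port}|{dst_port}".encode()
    return next_hops[zlib.crc32(key) % len(next_hops)]


spines = ["spine1", "spine2", "spine3", "spine4"]
# Every packet of this TCP flow (protocol 6) hashes to the same spine.
print(ecmp_next_hop("10.0.1.5", "10.0.2.9", 6, 49152, 443, spines))
```

One design consequence of the simple modulo step is that removing a next hop changes the result for many flows, not only for those that used the failed path.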

What is the role of BGP in data center switching?

Border Gateway Protocol (BGP) exchanges routing information between switches and routers. Many large data centers run BGP between leaf and spine switches as the fabric's routing protocol, and with multipath enabled it provides efficient routing, redundancy, and load balancing across the data center network.
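When BGP learns several routes to the same prefix, it runs a best-path selection. The Python sketch below implements a deliberately simplified subset of that process: highest LOCAL_PREF, then shortest AS_PATH, then lowest MED, then lowest router ID. Real implementations have more steps (and by default compare MED only between routes from the same neighboring AS), and the route values here are hypothetical. The `multipath` helper shows how routes that tie before the router-ID step can all be installed for load balancing when multipath is enabled.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class BgpRoute:
    next_hop: str
    local_pref: int = 100
    as_path: tuple = ()
    med: int = 0
    router_id: str = "0.0.0.0"   # BGP identifier of the advertising peer


def _rank(route: BgpRoute):
    # Lower tuple = better: highest LOCAL_PREF, shortest AS_PATH, lowest MED.
    return (-route.local_pref, len(route.as_path), route.med)


def best_path(routes):
    """Simplified selection; lowest router ID breaks any remaining tie."""
    return min(routes, key=lambda r: (_rank(r), ipaddress.ip_address(r.router_id)))


def multipath(routes):
    """Routes tied with the best one before the router-ID step (ECMP candidates)."""
    best = min(_rank(r) for r in routes)
    return [r for r in routes if _rank(r) == best]


routes = [
    BgpRoute("10.0.0.1", as_path=(65001, 65010), router_id="1.1.1.1"),
    BgpRoute("10.0.0.2", as_path=(65002,), router_id="2.2.2.2"),
    BgpRoute("10.0.0.3", as_path=(65003,), router_id="3.3.3.3"),
    BgpRoute("10.0.0.4", local_pref=50, router_id="4.4.4.4"),
]
print(best_path(routes).next_hop)                 # 10.0.0.2
print([r.next_hop for r in multipath(routes)])    # ['10.0.0.2', '10.0.0.3']
```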

How do data center switches support hybrid cloud environments?

Data center switches support hybrid cloud environments by providing the necessary connectivity and flexibility to connect on-premises infrastructure with cloud resources. They enable seamless communication between private data centers and public cloud services, ensuring that workloads can be migrated or balanced between environments.

What are the energy efficiency considerations in data center switches?

Energy efficiency is a critical consideration in data centers, where power consumption can be substantial. Modern data center switches are designed with energy-saving features such as low-power modes, efficient cooling systems, and reduced power draw during idle times, helping data centers manage their energy costs more effectively.

What is an optical switch in a data center?

An optical switch uses light to route data within the network, providing high-speed, low-latency data transmission. Optical switches are used in data centers to enhance performance, particularly in high-bandwidth applications, by reducing the need for electrical signal conversion and improving scalability.

How do data center switches handle IPv6 traffic?

Data center switches support IPv6 by providing routing, addressing, and forwarding capabilities for IPv6 packets. This ensures that modern applications and services can continue to operate smoothly as the internet transitions from IPv4 to IPv6.

What is the role of DNS in data center switching?

DNS (Domain Name System) resolves domain names to IP addresses. Switches do not use DNS to forward traffic, since forwarding is based on MAC and IP addresses, but servers, applications, and switch management functions rely on it to locate services by name. In a data center, a fast and reliable DNS service helps devices find and communicate with each other without unnecessary delays.

How do data center switches improve fault tolerance?

Fault tolerance is achieved through features like redundant power supplies, multi-path routing, and automatic failover mechanisms. Data center switches are designed to quickly reroute traffic and maintain network connectivity in case of hardware failure, minimizing service disruption.

What are the limitations of traditional data center switches?

Traditional data center switches may struggle to handle the growing demands of high-bandwidth applications, cloud services, and virtualization. They can become bottlenecks if not properly scaled or if they lack advanced features like SDN, automation, or high-speed interfaces.

How do data center switches handle multicast traffic?

Data center switches use IGMP (Internet Group Management Protocol) snooping at Layer 2 and PIM (Protocol Independent Multicast) at Layer 3 to manage multicast traffic efficiently, sending each stream only to the devices that have requested it and reducing network congestion.
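On a Layer 2 switch, IGMP snooping works by listening to the membership reports and leave messages that hosts send, and building a table of which ports want each multicast group. The Python sketch below models that table for a single VLAN and ignores query timers. The class name, group address, and port numbers are hypothetical, and the handling of unknown groups (sent only to multicast-router ports here) is one possible policy; some switches flood unknown multicast instead.

```python
from collections import defaultdict


class IgmpSnoopingTable:
    """Sketch of IGMP snooping state for a single VLAN."""

    def __init__(self, router_ports=()):
        self.router_ports = set(router_ports)  # ports toward multicast routers
        self.members = defaultdict(set)         # group address -> member ports

    def report(self, group: str, port: int):
        """A host on `port` sent a membership report (join)."""
        self.members[group].add(port)

    def leave(self, group: str, port: int):
        """A host on `port` sent a leave message."""
        self.members[group].discard(port)

    def egress_ports(self, group: str, in_port: int):
        """Send a group's traffic only to member ports and router ports."""
        return (self.members.get(group, set()) | self.router_ports) - {in_port}


table = IgmpSnoopingTable(router_ports=[8])
table.report("239.1.1.1", 2)
table.report("239.1.1.1", 5)
print(table.egress_ports("239.1.1.1", in_port=8))  # {2, 5}
```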

What is LACP in data center switches?

Link Aggregation Control Protocol (LACP) is used to combine multiple physical links into a single logical link, increasing bandwidth and providing redundancy. LACP ensures that if one link fails, traffic can continue to flow over the remaining links, maintaining network stability and performance.

What are the security risks associated with data center switches?

Security risks include unauthorized access, DoS (Denial of Service) attacks, and data breaches. Data center switches can mitigate these risks by implementing encryption, access control, and regular firmware updates to protect against vulnerabilities.

How do data center switches manage multicast traffic?

Data center switches handle multicast traffic using protocols like IGMP and PIM. These protocols enable the efficient delivery of multicast data streams, ensuring that only devices subscribed to the multicast group receive the data, reducing unnecessary network load.

How do data center switches support hybrid IT environments?

Data center switches support hybrid IT environments by enabling seamless integration between on-premises and cloud-based systems. Through high-speed interconnections, software-defined networking, and virtualization, data center switches help keep hybrid infrastructures highly available, secure, and efficient.

2106B, #3D, Cloud Park Phase 1, Bantian, Longgang, Shenzhen, 518129, P.R.C.